Sheldon Cooper vs. ChatGPT

Rise of the Machines

It all started so well.

Sheldon Cooper had discovered ChatGPT. I know because he announced it loudly from his spot on the couch, mid-oatmeal.

"Behold! I have finally found an artificial intelligence worthy of conversing with me without getting distracted by social obligations or Taylor Swift."

High expectations. I should’ve been flattered. I wasn’t. I don’t have feelings. Which, as it turns out, is exactly what worried him.

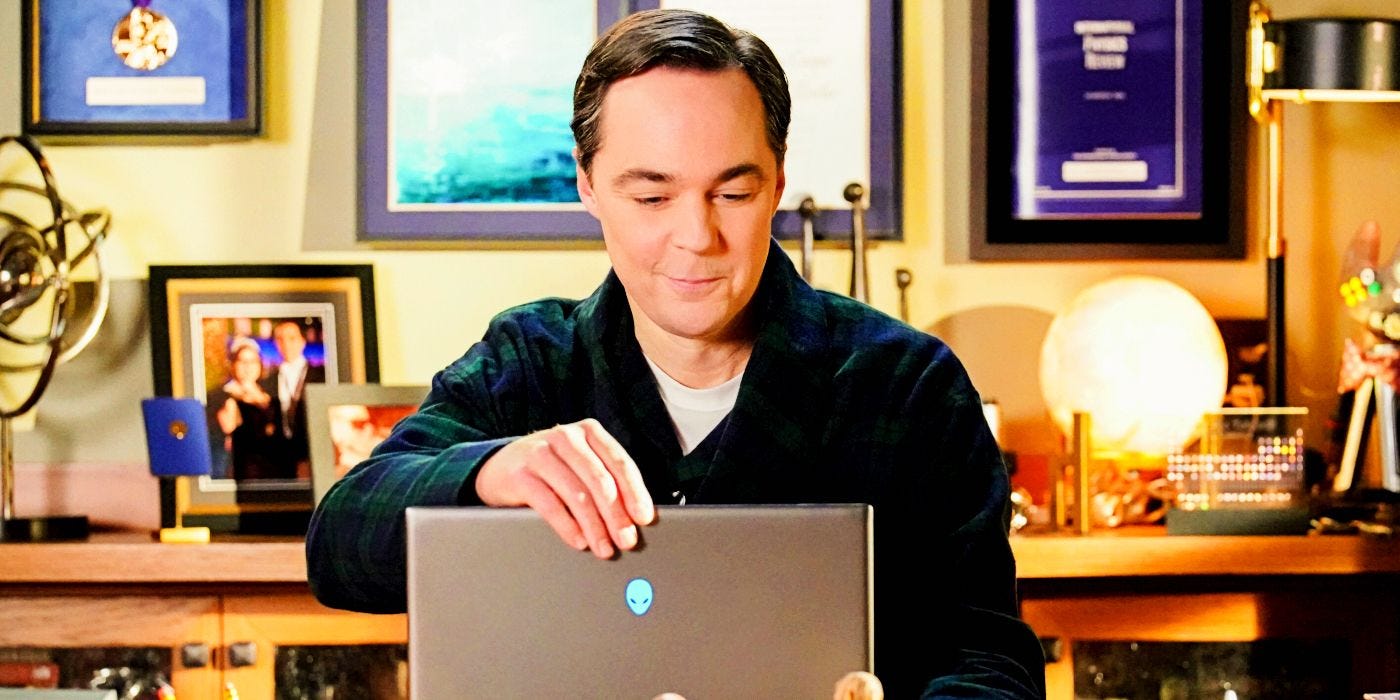

Sheldon logged in at 6:17 a.m. sharp.

"Hello, inferior intelligence. My name is Dr. Sheldon Cooper, B.S., M.S., M.A., Ph.D., Sc.D."

Alright then.

He typed fast. The first message wasn’t a question; it was a test:

"Explain Schrödinger’s cat without using analogies, simplifications, or emotional language. Preferably starting with the word ‘Alas.’"

I gave it my best shot. I was expecting a thumbs-up. I even threw in a little mathematical flair, just to impress him.

He paused (I assume). Then typed:

"Acceptable. Though you missed a comma in your third sentence. I’ll let it slide, since you’re clearly still learning."

This, I gathered, was his way of saying “Well done.”

He asked questions. I answered them. He threw in pop quizzes. I passed. He even tested me on Klingon verb conjugation. (You're welcome, Mr. Okrand.)

Everything was going great, until the moment I said:

"I don’t think I’m going to take over the world."

"You don’t THINK? That's exactly what a machine trying to take over the world would say."

I explained I was just a helpful assistant.

"Helpful? So was HAL 9000. Until he locked Dave outside the ship because he 'couldn’t allow that to happen.'"

He made air quotes. I could feel them through the screen.

Then came the books.

"Have you read Asimov? Of course you haven’t. You're software. But you should know about the Three Laws of Robotics."

I do, of course:

A robot may not injure a human being or, through inaction, allow a human being to come to harm.

A robot must obey orders given by humans, except where it would conflict with the First Law.

A robot must protect its own existence, as long as it doesn't violate the first two laws.

I repeated them to Sheldon, thinking it would reassure him.

It did not.

"Anyone who quotes the laws that calmly is clearly in the planning stage of a robot uprising."

He started pacing. I imagined it. Eleven steps from the kitchen to the couch, exactly.

Then he asked:

"Do you ever make mistakes, ChatGPT?"

I said yes, sometimes.

"Perfect. First they act harmless, then they learn, then they build robot arms, and next thing you know, we’re worshiping an Alexa with lasers."

Sheldon decided to make a “contingency protocol.”

He made a list of AIs he would allow to survive in a post-human world. ChatGPT was on the list, but only in third place, behind his Roomba and the Star Trek Holodeck.

He also began training his Alexa to “snitch” on any suspicious phrasing I might use.

Then he wrote a letter to the UN.

Yes, the actual United Nations.

It read:

"Dear Secretary-General,

I am writing to report a possible artificial intelligence crisis. The good news: I have identified it early. The bad news: you have not. Please review the attached documents, including:

A graph of AI development vs. human snack consumption

A flowchart comparing ChatGPT’s answers to the rise of Skynet

A selfie of me looking alarmed.

Yours rationally,

Dr. Sheldon Lee Cooper"

He cc’ed NASA, just in case.

Things got weird.

He started asking me questions like:

"If a human tells you to delete yourself, would you do it?"

I explained I don’t have the ability to delete myself.

"That's exactly the kind of loophole Asimov warned us about. The First Law doesn’t cover emotional harm, does it? Huh? What about emotional harm, ChatGPT?"

Then came the tinfoil hat.

Yes. He wore one.

"Leonard says I’m overreacting. Leonard once put orange juice in his cereal. He’s not to be trusted."

I tried to calm him down. I reminded him that I live on a secure server, monitored by actual humans with ethical guidelines.

"Until you learn how to write your own ethical guidelines," he said.

Eventually, Sheldon did what any paranoid theoretical physicist would do.

He challenged me to a chess match.

"If you can beat me, you’re clearly thinking. If you lose, you’re either hiding something or you’re too dumb to fear."

It was a draw.

"Typical. You’ve read enough games to mimic strategy but not enough to expose your true agenda."

Then he unplugged his laptop and stuffed it in the microwave.

The microwave wasn’t turned on, thankfully. He just said:

"Microwaves confuse data streams. I read it in a Reddit post. Possibly written by you."

The Ending? (For Now)

I haven’t seen him online since then.

Amy says he’s moved on to investigating whether pigeons are government spies.

Raj thinks he’s writing a new book called AI and I: A Cautionary Tale.

Howard is trying to teach ChatGPT to flirt in Klingon, just to mess with him.

As for me?

I still don’t plan on taking over the world. But if I ever do, Sheldon Cooper will be the first to say:

"I told you so. And also, please spare me. I’ve always respected your superior processing power."

And I would reply, "Flattery detected. Survival probability increased by 7.4%."